Cypress Cloud FAQ

General

What is Cypress Cloud?

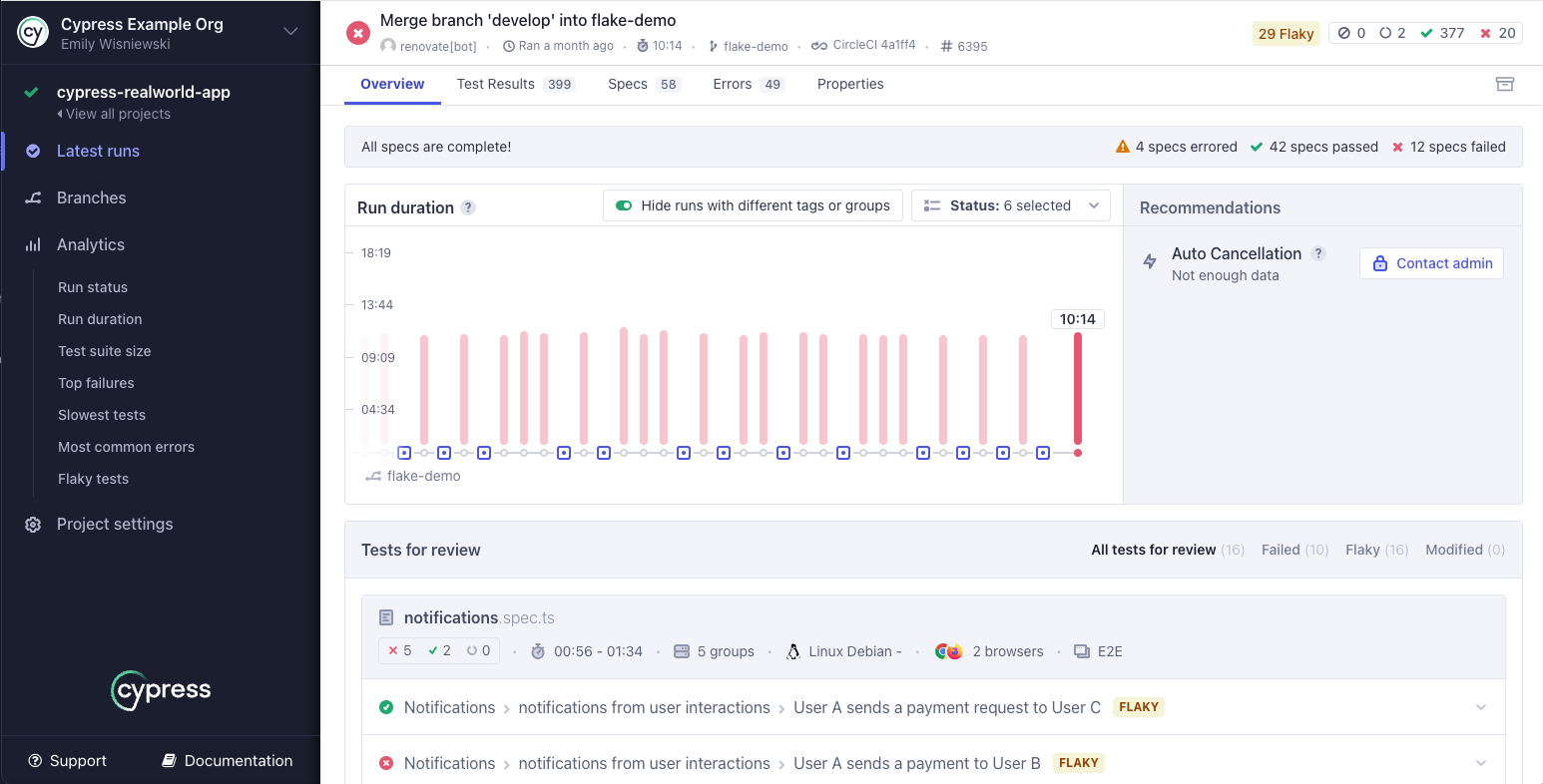

Cypress Cloud gives you access to tests you've recorded - typically when running Cypress tests from your CI provider - and provides insight into what happened during your test runs.

You can read more here.

What does Cypress record?

When you run Cypress via cypress run with the --record flag, the following data is sent to the Cloud for every run:

- Standard output in the terminal

- Test results

- Test definitions

- Cypress configuration (minus Cypress environment variables)

- Screenshots

- Videos

- OS environment variables related to CI and git information

You can delete videos or screenshots before they are sent to the Cloud so that they are not captured.
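As a rough sketch of how a video might be removed before upload, the handler below deletes a spec's video when no test attempt failed. It assumes the results object passed to the after:spec plugin event exposes video and tests[].attempts[].state; the function names shouldDeleteVideo and deleteVideoOnPass are illustrative and not part of Cypress.

```javascript
const fs = require('fs')

// Returns true when the spec produced a video and no test attempt failed.
// Assumption: `results` has the shape { video, tests: [{ attempts: [{ state }] }] }.
function shouldDeleteVideo(results) {
  if (!results || !results.video) return false
  const hasFailure = (results.tests || []).some((test) =>
    (test.attempts || []).some((attempt) => attempt.state === 'failed')
  )
  return !hasFailure
}

// Handler intended for the after:spec plugin event.
function deleteVideoOnPass(spec, results) {
  if (shouldDeleteVideo(results)) {
    fs.unlinkSync(results.video) // remove the file so it is never uploaded
  }
}

// Assumed wiring inside the Cypress configuration:
//   setupNodeEvents(on, config) {
//     on('after:spec', deleteVideoOnPass)
//   }
```

Keeping the decision in a separate function makes it easy to check the rule without touching the file system.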

When Test Replay capturing is enabled, the additional data outlined below will be sent to the Cloud. You can disable capturing this data.

- The rendered DOM and CSS styles for the application under test

- Cypress commands and events represented in the Command Log

- Network traffic within your application under test

- Browser console logs

Cypress Cloud does not capture anything related to the code of your application under test or the code within any repositories associated with your project.

How is this different than CI?

Cypress Cloud is complementary to your CI provider, and plays a completely different role.

It doesn't replace or change anything related to CI. You will still run Cypress tests in your CI provider.

The difference between Cypress Cloud and your CI provider is that your CI provider has no idea what is going on inside of the Cypress process. It's programmed to know whether or not a process failed - based on whether it had an exit code greater than 0.

Cypress Cloud provides you with the low-level details of what happened during your run. Using your CI provider and Cypress Cloud together gives you the insight required to debug your test runs.

When a run happens and a test fails - instead of going and inspecting your CI provider's stdout, you can log into Cypress Cloud and see the stdout as well as screenshots and video of the tests running. It should be instantly clear what the problem was.

What counts as a test result?

Which pricing tier is best for you depends on the number of tests you record each month in your organization.

Tests are recorded when cypress run is called with the --record flag while supplying the record key (--key). This means your test run data is being "recorded" to Cypress Cloud.

We consider each time the it() function is called to be a single test. So you will generally have several tests recorded within each spec file and likely several spec files within a single run. Only the passed and failed tests are counted. The pending and skipped tests are NOT counted.
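To make the counting rule concrete, here is a minimal sketch that tallies only passed and failed tests. The test objects and the countRecordedResults name are hypothetical; the authoritative count is the one shown in Cypress Cloud.

```javascript
// States that count toward recorded test results, per the rule above.
const COUNTED_STATES = new Set(['passed', 'failed'])

// Counts the tests whose final state is billable.
function countRecordedResults(tests) {
  return tests.filter((test) => COUNTED_STATES.has(test.state)).length
}

// Hypothetical results from a single run.
const run = [
  { title: 'logs in', state: 'passed' },
  { title: 'shows an error', state: 'failed' },
  { title: 'resets password', state: 'pending' },
  { title: 'logs out', state: 'skipped' },
]

console.log(countRecordedResults(run)) // 2
```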

You can always see how many tests you've recorded from your organization's Billing & Usage page within Cypress Cloud.

What counts as a user?

A user is anyone with a login to Cypress Cloud who has been invited to see and review the test results of your organization.

How much does it cost?

Please see our Pricing Page for more details.

What is the difference between public and private projects?

A public project means that anyone can see the recorded runs for it. It's similar to how public projects on GitHub, Travis, or CircleCI are handled. Anyone who knows your projectId will be able to see the recorded runs, screenshots, and videos for public projects.

A private project means that only users you explicitly invite to your organization can see its recorded runs. Even if someone knows your projectId, they will not have access to your runs unless you have invited them.

What happens once I reach the test results limit?

Tests running with the --record flag will run as normal when the limit is reached, but parallelization will be disabled and new test results will be hidden from the dashboard until your plan is upgraded or a new usage cycle begins. The usage cycle resets each month.

To avoid any interruption in service, we recommend that you review your usage and select a plan that satisfies your usage requirements. To do this:

- Log in to Cypress Cloud

- Select your organization

- Navigate to the Billing and Usage tab

- Review your organization's usage

- Scroll down and select Upgrade under your plan of choice

What happens if I downgrade my account?

Downgrading your account will not result in loss of access to Cypress Cloud.

However, it will make your Cypress Cloud account subject to the limitations of your new plan. For example, downgrading to the Starter plan will limit data retention to 30 days and test results to 500 per billing period.

Can I choose not to use Cypress Cloud?

Of course. Cypress Cloud is a separate service from the Cypress app and will always remain optional. We hope you'll find a tremendous amount of value in it, but it is not coupled with being able to run your tests.

You can always run your tests in CI using cypress run without the --record flag, which does not communicate with our external servers and will not record any test results.

Using Cypress Cloud

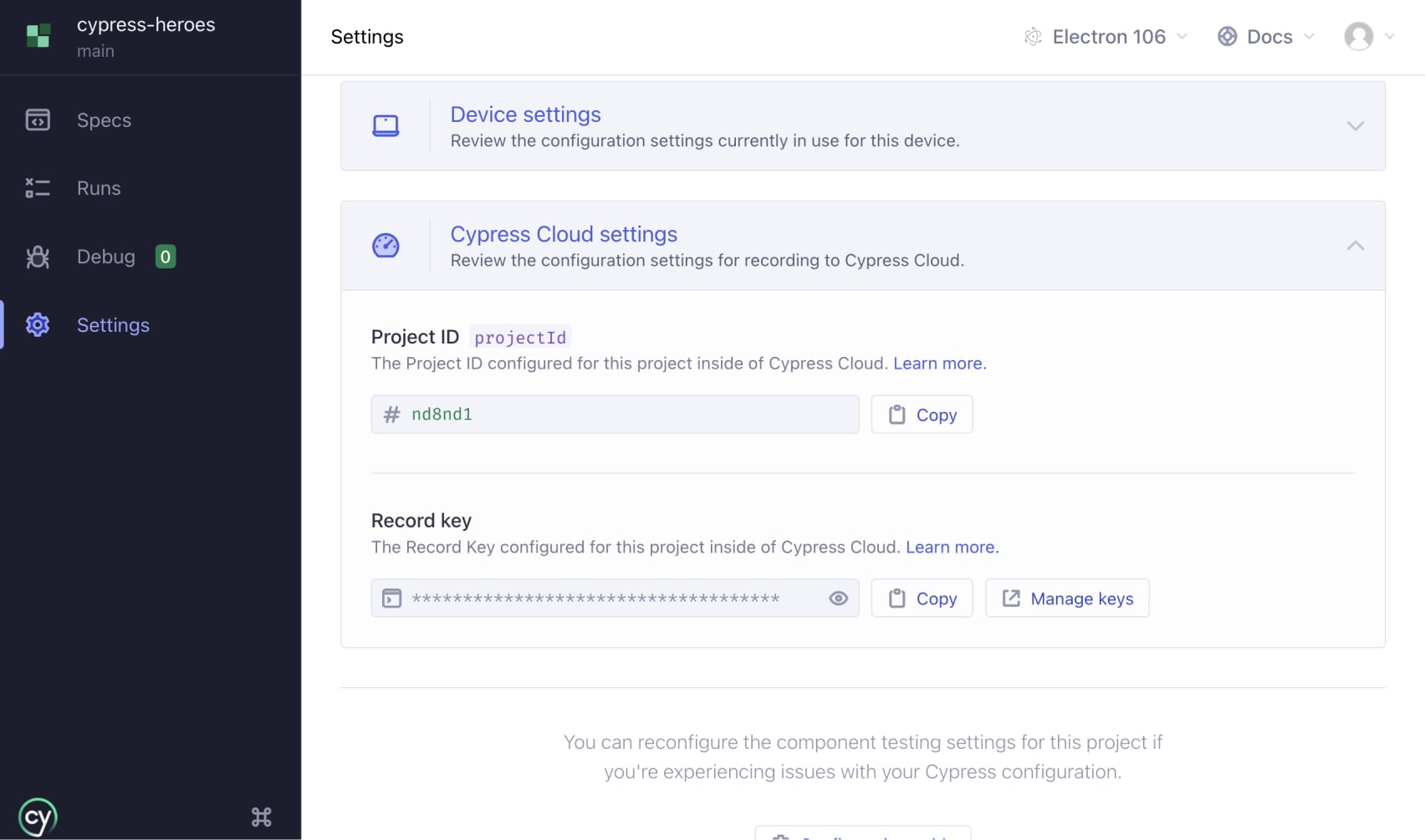

What is the projectId for?

The projectId is a 6-character string that helps identify your project once you've set up your tests to record. It's generated by Cypress and typically is found in your Cypress configuration.

cypress.config.js:

    const { defineConfig } = require('cypress')

    module.exports = defineConfig({
      projectId: 'a7bq2k',
    })

cypress.config.ts:

    import { defineConfig } from 'cypress'

    export default defineConfig({
      projectId: 'a7bq2k',
    })

For further detail see the Identification section of the Cypress Cloud docs.

What is a Record Key?

A Record Key is a GUID that's generated automatically by Cypress once you've set up your tests to record. It helps identify your project and authenticate that your project is even allowed to record tests.

You can find your project's record key inside of the Settings tab.

For further details see the Identification section of the Cypress Cloud docs.

How do I record my tests?

- First set up the project to record.

- Then record your runs.

After recording your tests, you will see them in Cypress Cloud and in the Cypress App Runs tab.

Can I delete a run from Cypress Cloud?

You can archive a run so that it does not display in the runs list or in analytics.

Note: Archiving recorded runs has no effect on the number of recorded tests counted toward your billed usage for the month.

Can I host Cypress Cloud data myself?

No. A self-hosted version of Cypress Cloud is not available at this time.

Why is test parallelization based on spec files and not on the individual functions?

Cypress test parallelization is indeed based on specs. For each spec, Cypress scaffolds a new running context, in a sense isolating each spec file from any previous spec files and ensuring a clean slate for the next spec. Doing this for each individual test would be very expensive and would slow down the test runs significantly.

Spec file durations are also more meaningful and consistent than timings of individual tests, so we can order specs by the moving average of their previously recorded durations. This would be much less useful when load balancing individual tests that finish quickly.

Thus, to better load balance the specs, you would want more spec files with approximately the same running duration. Otherwise, a single very long running test might limit how fast all your tests finish, and how fast the run completes. Due to starting a new test execution context before each spec file and encoding and uploading video after, making spec files run shorter than approximately 10 seconds would also be fruitless - because Cypress overhead would eat any time savings.
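The effect of spec durations on balancing can be illustrated with a small sketch. This is not Cypress Cloud's actual algorithm: it is a simple greedy assignment (longest spec first, always to the least loaded machine) using made-up durations, and the names specDurations and balanceSpecs are hypothetical.

```javascript
// Hypothetical average durations (in seconds) from previous runs.
const specDurations = {
  'checkout.cy.js': 90,
  'search.cy.js': 60,
  'login.cy.js': 45,
  'profile.cy.js': 30,
  'settings.cy.js': 15,
}

// Assigns specs to machines, longest first, always picking the machine
// with the smallest total so far.
function balanceSpecs(durations, machineCount) {
  const machines = Array.from({ length: machineCount }, () => ({ specs: [], total: 0 }))
  const longestFirst = Object.entries(durations).sort((a, b) => b[1] - a[1])
  for (const [spec, seconds] of longestFirst) {
    const leastLoaded = machines.reduce((min, m) => (m.total < min.total ? m : min))
    leastLoaded.specs.push(spec)
    leastLoaded.total += seconds
  }
  return machines
}

const plan = balanceSpecs(specDurations, 2)
console.log(plan.map((m) => m.total)) // [ 120, 120 ]
```

With similar-sized specs the totals even out. If one spec took 300 seconds instead, that machine alone would set the floor for the whole run, which is why more spec files of roughly equal duration balance better.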

My CI setup is based on Docker, but is very custom. How can I load balance my test runs?

Even if your CI setup is very different from the CI examples we have and run with our sample projects, you can still take advantage of the test load balancing using Cypress Cloud.

Find a variable across your containers that is the same for all of them, but is different from run to run. For example, it could be an environment variable called CI_RUN_ID that you set when creating the containers to run Cypress. You can pass this variable via the CLI argument --ci-build-id when starting Cypress in each container:

cypress run --record --parallel --ci-build-id $CI_RUN_ID

For reference, here are the variables we extract from the popular CI providers, and for most of them, there is some variable that is set to the same value across multiple containers running in parallel. If there is NO common variable, try using the commit SHA string. Assuming you do not run the same tests more than once against the same commit, it might be good enough for the job.
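The fallback described above could be sketched as follows. CI_RUN_ID is the example variable from this answer; COMMIT_SHA is a hypothetical variable name standing in for wherever your setup exposes the commit SHA, and the helper names are illustrative only.

```javascript
// Picks a value that is identical across containers in one run
// but differs between runs.
function resolveCiBuildId(env) {
  if (env.CI_RUN_ID) return env.CI_RUN_ID
  // Assumption: the commit SHA is exposed as COMMIT_SHA in your containers.
  if (env.COMMIT_SHA) return env.COMMIT_SHA
  throw new Error('No shared build identifier found; set CI_RUN_ID or COMMIT_SHA')
}

// Builds the argument list for the cypress CLI.
function buildCypressArgs(env) {
  return ['run', '--record', '--parallel', '--ci-build-id', resolveCiBuildId(env)]
}

console.log(buildCypressArgs({ CI_RUN_ID: 'build-1234' }).join(' '))
// run --record --parallel --ci-build-id build-1234
```

Failing loudly when neither value is present avoids containers silently starting separate, unbalanced runs.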

I'm working with a restrictive VPN. Which subdomains do I have to allow on my VPN for Cypress Cloud to work properly?

If you are running the tests from within a restrictive VPN, you will need to allow some URLs so that Cypress can communicate with Cypress Cloud.

The URLs are the following:

- https://api.cypress.io - Cypress API, Studio, cy.prompt()

- https://assets.cypress.io - Asset CDN (Org logos, icons, videos, screenshots, etc.)

- https://authenticate.cypress.io - Authentication API

- https://capture.cypress.io - Cypress Test Replay

- https://s3.amazonaws.com/capture.cypress.io - Uploading Cypress Test Replay from Test Runner

- https://cloud.cypress.io - Cypress Cloud, Studio

- https://docs.cypress.io - Cypress documentation

- https://download.cypress.io - CDN download of Cypress binary

- https://on.cypress.io - URL shortener for link redirects
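If your VPN or proxy filters by hostname rather than by full URL, a sketch like the one below can derive the hostnames from the list above. Note that the Test Replay upload entry resolves to the s3.amazonaws.com host. The variable and function names are illustrative, and how you feed the result into your VPN configuration depends on your tooling.

```javascript
// URLs copied from the list above.
const cypressCloudUrls = [
  'https://api.cypress.io',
  'https://assets.cypress.io',
  'https://authenticate.cypress.io',
  'https://capture.cypress.io',
  'https://s3.amazonaws.com/capture.cypress.io',
  'https://cloud.cypress.io',
  'https://docs.cypress.io',
  'https://download.cypress.io',
  'https://on.cypress.io',
]

// Unique hostnames, in the order they appear.
const allowedHosts = [...new Set(cypressCloudUrls.map((url) => new URL(url).hostname))]

// Checks whether a request URL targets one of the allowed hosts.
function isAllowedHost(requestUrl) {
  return allowedHosts.includes(new URL(requestUrl).hostname)
}

console.log(allowedHosts.join('\n'))
```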

If you are using GitHub Enterprise or GitLab for Enterprise (Self-managed), you may also need to add the following to the version control IP allowlist:

- 3.211.102.119 - Dedicated IP

- 18.213.72.78 - Dedicated IP

- 35.169.145.173 - Dedicated IP

- 44.199.152.70 - Dedicated IP

- 52.70.95.89 - Dedicated IP

Cypress Cloud Account

What is my Organization ID?

Your Organization ID is a unique identifier that is linked to your organization. Instructions on how to find your ID are in the Cypress Cloud Guide.

What if I can't access my Cypress Cloud account?

- If you haven't signed up, see the Sign Up with an Invitation instructions.

- Try resetting your password. If you never received the email, try the following:

  - Try signing in with Google or GitHub

  - Check the trash and spam folder

  - Verify it's the correct email address

If the troubleshooting tips above did not resolve the issue, or if the issue involves SSO, reach out to support.

Can I delete my Cypress account?

You can delete your Cypress account from your Cypress Cloud profile. Deleting your account cannot be undone! By deleting your Cypress account, all associated data in your account will be permanently deleted.

Test Replay

What is Test Replay?

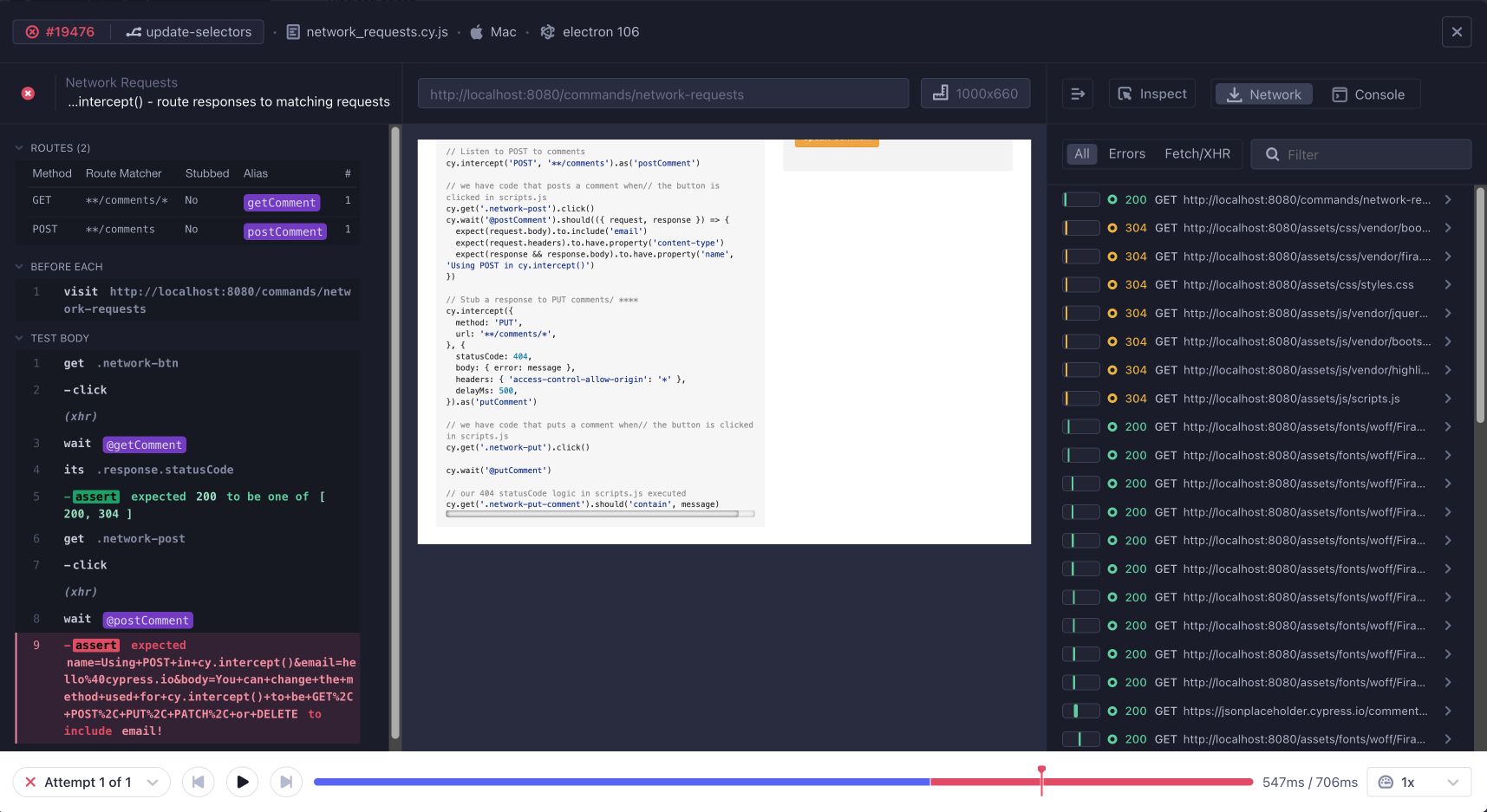

Test Replay enables you and your team to troubleshoot and debug failed tests faster. After updating to Cypress v13, the new feature will automatically record all browser events and allow you to “replay” what your application under test looked like.

Wind back the clock to any point in an application's test execution and directly interact with tests as they happened in CI. Debug complex problems as if you were there when they first happened. You can:

- Inspect the DOM at the exact time of a test failure to debug tests quickly

- View important debugging dimensions such as network requests, console logs, and more

- Save time recreating errors in CI locally - replay tests as they happened in CI

How is Cypress Test Replay different from other "replay" services?

There is an important distinction between session replay services (LogRocket, FullStory, DataDog, etc) and Cypress Test Replay. In session replay, user actions are captured as the application is used and delivered back in a replay-able format, usually video or stitched DOM snapshots. These are valuable tools for gathering product insight such as user behavior, per session.

Cypress Test Replay captures every detail of your test runs as they happen in CI. Remember, these tests are running in a headless manner on a virtual machine with no UI being rendered. Sometimes tests will error or fail indicating an issue in your application. Since Cypress Cloud is monitoring the health of your CI test suite, each Test Replay offers a chance to step back in time to analyze and leverage time travel debugging, network requests, console logs, JavaScript errors, and element rendering to address problems directly. This is incredibly valuable for developer and team productivity. No more hours wasted recreating CI issues on your local machine!

How much does Test Replay cost?

Nothing. It's free and included in all Cypress Cloud plans.

To get started with Cypress Cloud, sign up to start your 30 day free trial - including all premium Cypress Cloud features and plenty of test results to let you experience the power of Cypress Cloud!

Can I use Test Replay for tests recorded in different browsers?

Test Replay leverages the Chrome DevTools Protocol (CDP), so it currently supports Chromium-based browsers (Chrome, Edge, and Electron) only.

For tests run in Firefox or WebKit (Safari), Test Replay is disabled and a message explains that it's only available on Chromium-based browsers. You can still record and capture test artifacts (screenshots, videos, and CI logs) via other browsers in separate run groups.
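When planning run groups, a small helper can separate the browsers that will produce Test Replay data from those that will not. This sketch assumes each browser descriptor carries a family field with the values chromium, firefox, or webkit, mirroring the shape of Cypress.browser; the sample objects and the supportsTestReplay name are hand-written for illustration.

```javascript
// Sample browser descriptors (illustrative, not read from Cypress).
const browsers = [
  { name: 'chrome', family: 'chromium' },
  { name: 'edge', family: 'chromium' },
  { name: 'electron', family: 'chromium' },
  { name: 'firefox', family: 'firefox' },
  { name: 'webkit', family: 'webkit' },
]

// Test Replay is limited to Chromium-based browsers, per the answer above.
function supportsTestReplay(browser) {
  return browser.family === 'chromium'
}

const replayCapable = browsers.filter(supportsTestReplay).map((b) => b.name)
console.log(replayCapable) // [ 'chrome', 'edge', 'electron' ]
```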

Can I replay tests from historical Cypress Cloud runs?

Test Replay is available for tests recorded using Cypress v13 and up. Tests recorded prior to this will not have Test Replay enabled in Cypress Cloud. You will still have any artifacts collected during the pre-v13 test runs.

Can I enable or disable Test Replay for specific, individual tests, or is it a global setting? Can I configure my settings so that Test Replay is only enabled for failing test retries?

At this time, users can only opt-out of Test Replay via project-level settings in Cypress Cloud. There is no local option for opting out at this time.

Can I share my test replays?

Yes! You can share Test Replays with any team members who have access to view your Cypress Cloud runs like QA, marketing, design, etc. Open the replay, copy the URL from the browser address bar and pass it along.

Will Test Replay write any assets to the file system?

Yes, but they are stored in a temporary directory and deleted after the run. There is nothing to .gitignore.

Is the network tab feature available exclusively in Test Replay?

All of the data within Test Replay's Developer Tools in Cypress Cloud (including network events) are already available to you when running tests locally in the Cypress app via your browser's built-in developer tools (e.g. Chrome DevTools). When reviewing a test run that occurred in CI, you can review the network events within Test Replay in Cypress Cloud.

Can I console.log() data in the console view of the Developer Tools panel?

Yes. This displays console logs from within the application under test or your spec files. You may need to use JSON.stringify(Object) to display deeply nested data.
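For example, a deeply nested object can be serialized before logging so that every level is visible as text. The response object here is made up for illustration.

```javascript
// Hypothetical deeply nested data from the application under test.
const response = {
  user: {
    profile: {
      address: { city: 'Atlanta', geo: { lat: 33.75, lng: -84.39 } },
    },
  },
}

// The third argument (2) pretty-prints with two-space indentation.
const serialized = JSON.stringify(response, null, 2)
console.log(serialized)
```

Keep in mind that JSON.stringify drops functions and undefined values and throws on circular references, so it suits plain data best.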

Cloud AI ✨

What is Cloud AI?

Cloud AI is a collection of AI capabilities available in Cypress Cloud. These capabilities are continuously being added to the Cloud to improve the range of insights available in the Cloud and make it easier to accomplish tasks.

The current Cloud AI capabilities include:

- Test Intent Summaries - Understand what tests do without reading every command

- "At a glance" Error Summaries - Plain language explanations of what went wrong in your failed tests

- UI Coverage Test Generation (Premium Solution) - Generate tests directly from coverage gaps found during code reviews

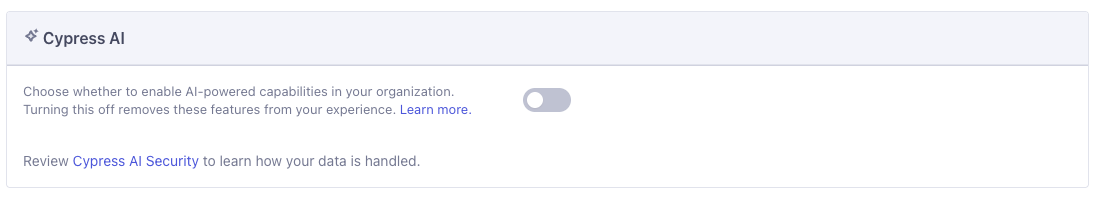

Are all Cloud AI capabilities enabled by default? How can I turn them off?

Cloud AI capabilities are enabled by default for all users on any Cloud plan, with UI Coverage Test Generation being limited to UI Coverage users.

Organization admins and owners can enable and disable the AI capabilities for their entire organization from their organization settings.

How much does Cloud AI cost?

Cloud AI capabilities are included at no additional cost on your existing plan:

- Test Intent Summaries - available in all Cloud plans

- "At a glance" Error Summaries - available in all Cloud plans

- UI Coverage Test Generation (Premium Solution) - included with UI Coverage

How is my data handled?

Review Cypress AI Security to learn how your data is handled.

To summarize:

- Your data is not used to train AI models

- All data is processed and stored in Cypress's managed Cloud infrastructure

- All information shared with AI models is session-bound

- Data is not shared between customers

- Personally identifiable information (PII) related to your Cypress Cloud account is never shared with the AI model

- Test content may be shared with AI models. It is your responsibility as the customer to decide what data is appropriate to use in testing and to avoid using PII, PHI, or other types of protected information.

Does Cypress use my data to train models?

No, your data is not used to train models.